# 2. Self Attention Usage

# 2.1. Paper

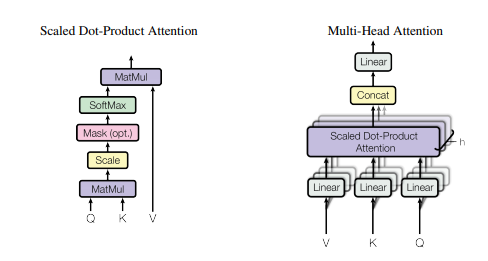

"Attention Is All You Need"

# 2.2. Overview

# 2.3. Usage Code

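```python
import torch
from model.attention.SelfAttention import ScaledDotProductAttention

# Batch of 50 sequences, 49 tokens each, 512 features per token
x = torch.randn(50, 49, 512)

# Attention module with 8 heads over 512-dimensional inputs
sa = ScaledDotProductAttention(d_model=512, d_k=512, d_v=512, h=8)

# Self-attention: the same tensor is passed as queries, keys, and values
output = sa(x, x, x)
print(output.shape)
```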
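For readers who want to see what the call above computes, here is a minimal sketch of scaled dot-product attention from the paper, `softmax(Q K^T / sqrt(d_k)) V`, written directly in PyTorch. It is not the `ScaledDotProductAttention` class itself: the helper names `scaled_dot_product_attention` and `split_heads` are hypothetical, and the sketch assumes no learned projections, no dropout, and no masking. It simply slices the 512 features into 8 heads of 64 to show how the shapes flow.

```python
import math
import torch


def scaled_dot_product_attention(q, k, v):
    """Return softmax(q @ k^T / sqrt(d_k)) @ v and the attention weights."""
    d_k = q.size(-1)
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)  # (..., n_q, n_k)
    weights = torch.softmax(scores, dim=-1)            # each row sums to 1
    return weights @ v, weights


def split_heads(x, h):
    """Reshape (batch, n, h * d) into (batch, h, n, d)."""
    b, n, _ = x.shape
    return x.view(b, n, h, -1).transpose(1, 2)


torch.manual_seed(0)
x = torch.randn(2, 49, 512)  # (batch, tokens, d_model); small batch for illustration
h = 8

# Assumption: no learned projections, so queries, keys, and values
# are all the same head-split view of x.
q = k = v = split_heads(x, h)                        # (2, 8, 49, 64)
out, weights = scaled_dot_product_attention(q, k, v)

# Merge the heads back into a single feature dimension.
out = out.transpose(1, 2).reshape(2, 49, 512)

print(out.shape)      # torch.Size([2, 49, 512])
print(weights.shape)  # torch.Size([2, 8, 49, 49])
```

As in the usage example, the output keeps the input shape `(batch, tokens, d_model)`. A full multi-head layer would normally add learned linear projections before splitting the heads and after merging them.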